Hi @grb, the advice that reducing p will increase security is simply not supported by the relevant documents, either directly or by implication.

I shall explain the detail on which I base this claim, and I am happy to await a refutation, provided it is based directly on the description and discussion in the 2015 paper introducing Argon2 by Biryukov et al. and the 2021 RFC (RFC 9106) by the same authors with S. Josefsson.

Your description of a memory-limited cracking unit simply confirms that Argon2 is designed to be memory-hard, not processor-soft. To quote the authors' summary of p, m and t:

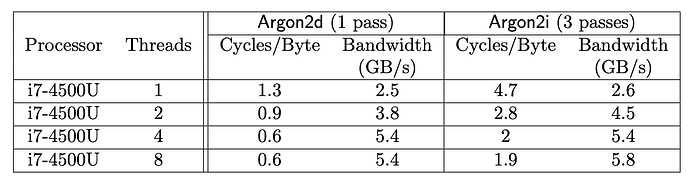

The authors provide a table illustrating, for the number of threads available, the inverse relationship between the reduction in CPU cycles per byte and memory transfer cycles. Key to Argon2 is the fact that it is a serial process in which pre-computation is essentially negated. As they said above, parallelism does not reduce cracking time in proportion to the number of cores available, which is one reason Argon2 is so strong: it is memory-bound rather than compute-bound.
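To make the memory-bound point concrete, here is a minimal sketch of a deliberately simplified attacker-cost model. It is my own illustration, not taken from the paper or the RFC: it assumes each guess computes t × m blocks of 1 KiB, that the cracking unit is limited by memory capacity and bandwidth, and that each block operation moves roughly 3 KiB of memory traffic (an assumed constant). The function name and parameters are hypothetical. The point it shows is that p splits the same m blocks into lanes, so it does not change the work per guess.

```python
KIB = 1024


def guesses_per_second(m_kib: int, t: int, p: int,
                       device_mem_bytes: int, device_bw_bytes_s: float,
                       traffic_per_block: int = 3 * KIB) -> float:
    """Bandwidth-bound estimate of Argon2 guesses/s on a cracking unit.

    Simplified model (assumption): every guess computes t * m blocks of
    1 KiB, and each block operation moves `traffic_per_block` bytes.
    p is accepted only to show that it never enters the calculation:
    lanes partition the same m blocks, so t * m is unchanged.
    """
    del p  # lanes split memory into chains; total work stays t * m

    # If not even one guess fits in device memory, nothing can run.
    if device_mem_bytes < m_kib * KIB:
        return 0.0

    # Memory traffic per guess; throughput is capped by bandwidth.
    bytes_per_guess = t * m_kib * traffic_per_block
    return device_bw_bytes_s / bytes_per_guess


# Example: 64 MiB, t=3 on a hypothetical 24 GiB, 1 TB/s device.
rate_p1 = guesses_per_second(65536, 3, 1, 24 * 2**30, 1e12)
rate_p4 = guesses_per_second(65536, 3, 4, 24 * 2**30, 1e12)
print(rate_p1 == rate_p4)  # p does not change the estimate
```

In this model, doubling m or t halves the attacker's rate, while changing p leaves it untouched. Real devices add effects the model ignores (cache behaviour, synchronisation between lanes), so treat it as an illustration of the argument rather than a benchmark.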

To clarify the point that p is not a security factor, I refer to the authors’ own presentation as follows.

On Scalability, they advise that:

If reducing p could significantly improve security (doubling it, as your calculations on a 4090 imply), why would they not even hint at this possibility in the section on scalability?

Their comment in the same section on Parallelism is:

Allowing that those experiments reflected 2015 technology in terms of exhaustion, why is there no hint that a higher p is bad, or that a massive p might be some sort of security disaster? Argon2 and the RFC are not old.

To summarise in their own words under the heading GPU/FPGA/ASIC-unfriendly:

Moving on to the specific recommendations in RFC 9106, we find not defaults or rules of thumb but three explicit recommendations (the first two are repeated later under Security Considerations):

- The first, “uniformly safe”, is p=4, m=2 GiB, t=1.

- The second, for when you are too memory-constrained for that, is p=4, m=64 MiB, t=3.

- The third is a universal model covered in items 3-11, which specify p=4, m=maximum affordable memory, and t=maximum affordable running time given m.
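The three recommendations can be sketched as data plus a small tuning loop. The first two options are encoded with the values listed above (m expressed in KiB, as Argon2 counts memory in 1 KiB blocks). For the third, the loop keeps p=4, starts at the maximum affordable m, and looks for the largest t whose running time fits the budget, reducing m if even t=1 is too slow. `Argon2idParams`, `tune_third_option` and the `run_seconds` timing hook are hypothetical names of my own; the hook stands in for timing a real Argon2id call with whatever binding you use, and halving m is just one assumed way to "reduce m".

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Argon2idParams:
    t: int      # passes over memory
    p: int      # lanes
    m_kib: int  # memory in KiB (number of 1 KiB blocks)


# First and second recommended options, as listed above.
FIRST_RECOMMENDED = Argon2idParams(t=1, p=4, m_kib=2**21)   # 2 GiB
SECOND_RECOMMENDED = Argon2idParams(t=3, p=4, m_kib=2**16)  # 64 MiB


def tune_third_option(max_m_kib: int, max_seconds: float,
                      run_seconds: Callable[[Argon2idParams], float],
                      t_limit: int = 64) -> Argon2idParams:
    """Keep p=4, start at the largest affordable m, pick the largest t
    that fits the time budget; if even t=1 is too slow, reduce m.

    run_seconds is a hypothetical hook that times one Argon2id call.
    """
    p = 4
    m = max_m_kib
    while m >= 8 * p:  # Argon2 requires m >= 8 * p KiB
        best: Optional[Argon2idParams] = None
        for t in range(1, t_limit + 1):
            candidate = Argon2idParams(t=t, p=p, m_kib=m)
            if run_seconds(candidate) > max_seconds:
                break
            best = candidate
        if best is not None:
            return best
        m //= 2  # too slow even at t=1: halve m (assumed strategy)
    raise ValueError("no parameters fit the time budget")


# Usage with a fake timer: 0.25 s per GiB per pass (made-up figure).
fake_timer = lambda prm: prm.t * prm.m_kib / 2**20 * 0.25
print(tune_third_option(2**20, 1.0, fake_timer))
```

Note that p never varies in this procedure; only m and t are tuned against the hardware and the time budget.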

All earlier references to p are couched as 2 * cores. If setting p=2 notionally doubled security, or p=1 quadrupled it, compared with p=4, then I think it would have been mentioned in recommendation 2 or 3, rather than the RFC fussing over m and t with no mention of reducing p at all.

Security Considerations at the end of the RFC, before repeating recommendations 1 and 2 from above, contains no discussion of, or recommendation to, reduce (or increase) p. If p were a relevant security factor, why not?

The ineluctable conclusion is that it isn't. They said as much at the beginning.

The best advice remains to set p = 2 * cores (default 4), maximise m and set t to suit. Other advice is not supported by the discussion and recommendations of the Argon2 / RFC authors.
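As a minimal sketch of the p part of that advice: compute p = 2 * cores, capped at the RFC's upper limit of 2^24 - 1 lanes. Treating the default of 4 as a fallback when the core count is unknown is my own assumption, and `suggested_parallelism` is a hypothetical helper name; m and t would then be tuned to the memory and time you can afford.

```python
import os
from typing import Optional

MAX_LANES = 2**24 - 1  # upper bound on p in RFC 9106


def suggested_parallelism(cores: Optional[int] = None, default: int = 4) -> int:
    """p = 2 * cores; falls back to `default` (assumed) if cores is unknown."""
    if cores is None:
        cores = os.cpu_count()  # may return None on some platforms
    if not cores:
        return default
    return min(2 * cores, MAX_LANES)


print(suggested_parallelism(2))  # a 2-core machine gives p = 4
```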